Multimodal interaction integrates data from a variety of sensors that perceive the user and the environment, creating a more holistic view of the situation. When coupled with a situated agent, such as a robot or a virtual agent, it gives the agent greater situational awareness and lets it interact more naturally.

Robot Detection of Social Cues

A socially assistive robot helps a user through social interaction. To determine when and how to assist, the robot detects social cues conveyed by the user. It combines information from cameras, microphones, and other sensors to estimate how much help the user needs.

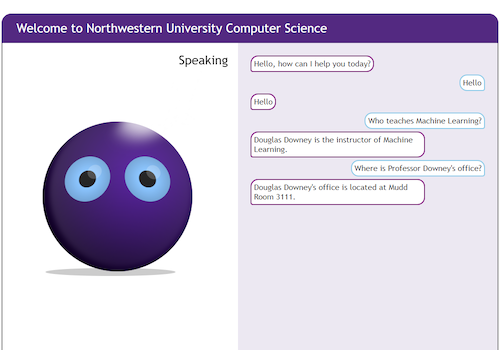

Kiosk

The multimodal kiosk answers questions posed in natural language. The kiosk's user interface detects users, recognizes speech, and displays a virtual agent. To understand natural language and answer questions, the kiosk uses analogical reasoning and commonsense knowledge.

The kiosk has been deployed at Northwestern University since 2018.