Robots & Social Cues

understanding and presenting social cues

Detecting Help

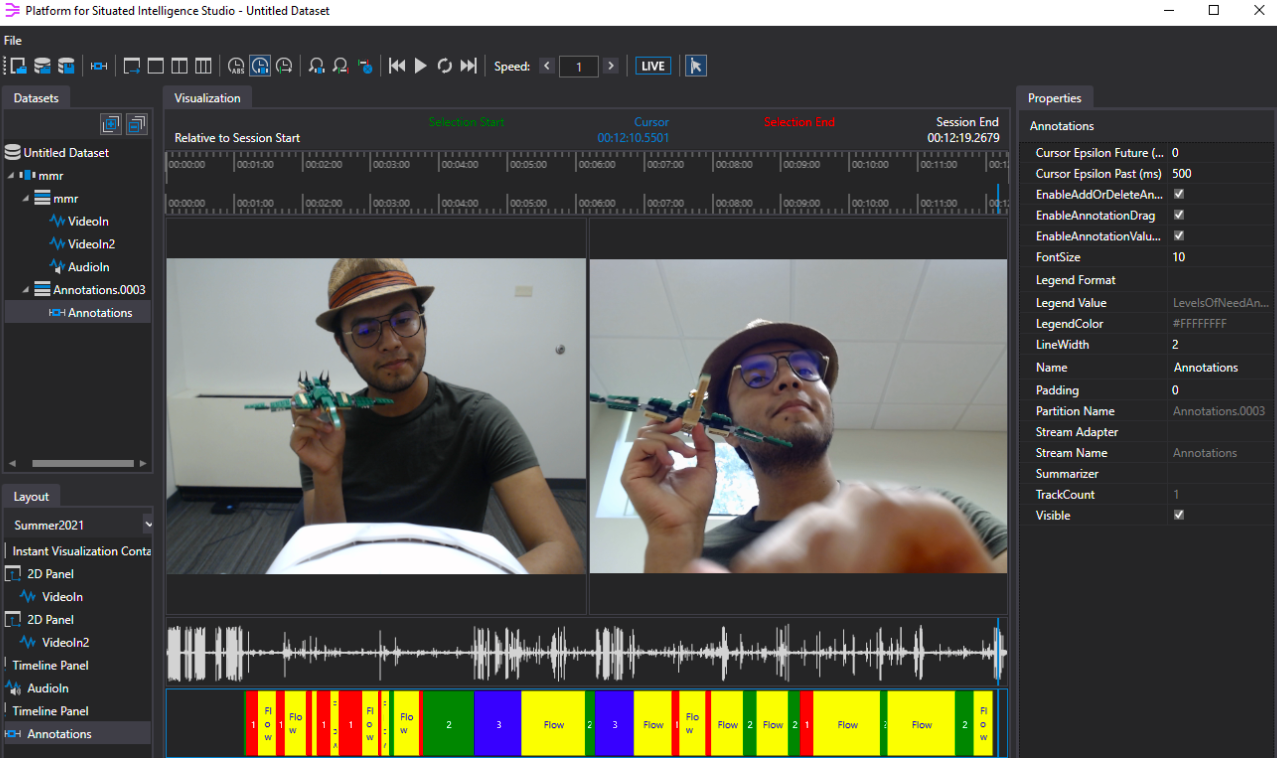

A socially assistive robot helps a user through social interaction. To determine when and how the robot should assist, we detect social cues conveyed by the user. The user may ask for help explicitly, but they may also signal a need for help more subtly, for example through particular eye gaze patterns. The robot combines information from cameras, microphones, and other sensors to estimate how much help the user needs (Wilson et al., 2022; Reneau & Wilson, 2020; Kurylo & Wilson, 2019; Wilson et al., 2019).
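This page does not say how the cues are combined. As a rough illustration only, the sketch below uses simple weighted late fusion: each modality is assumed to produce its own need-for-help probability, an explicit verbal request overrides the implicit cues, and the fused score is mapped to a graded level of assistance. The names `CueObservation`, `fuse`, and `assistance_level`, the weights, and the thresholds are all hypothetical and are not taken from the cited papers.

```python
from dataclasses import dataclass

# Illustrative sketch only: names, weights, and thresholds are hypothetical,
# not the architecture described in the cited papers.

@dataclass
class CueObservation:
    """Hypothetical evidence gathered from each sensor at one time step."""
    asked_for_help: bool    # explicit verbal request (e.g., from speech recognition)
    gaze_need_prob: float   # P(needs help) from a hypothetical eye-gaze classifier, in [0, 1]
    task_stall_prob: float  # P(needs help) from hypothetical task-progress signals, in [0, 1]

# Hypothetical weights for the implicit cues; they sum to 1 so the fused score stays in [0, 1].
WEIGHTS = {"gaze": 0.6, "task": 0.4}

def fuse(obs: CueObservation) -> float:
    """Weighted late fusion of per-modality probabilities into one need-for-help score."""
    for p in (obs.gaze_need_prob, obs.task_stall_prob):
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"probability out of range: {p}")
    if obs.asked_for_help:
        # Treat an explicit request as decisive, overriding the implicit cues.
        return 1.0
    return WEIGHTS["gaze"] * obs.gaze_need_prob + WEIGHTS["task"] * obs.task_stall_prob

def assistance_level(score: float) -> str:
    """Map the fused score to a hypothetical graded level of assistance."""
    if score >= 0.8:
        return "direct help"
    if score >= 0.5:
        return "hint"
    return "no action"

if __name__ == "__main__":
    # Strong gaze evidence plus moderate task stalling, but no explicit request.
    obs = CueObservation(asked_for_help=False, gaze_need_prob=0.9, task_stall_prob=0.5)
    print(assistance_level(fuse(obs)))  # prints: hint (fused score is about 0.74)
```

Returning a graded level rather than a yes/no decision mirrors the idea that the robot estimates how much help is needed, which leaves room to support user autonomy with lighter interventions first. In a real system the hand-set weights and thresholds would presumably be learned or tuned from interaction data.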

Building Rapport

In addition to understanding social cues, a robot can use social cues of its own to build rapport with the user. Our prior work showed that hand gestures and verbal acknowledgments contribute to the development of rapport (Wilson et al., 2017). Future work will integrate these behaviors into our automatic generation of robot behaviors (see the child-robot interaction project).

References

2022

- When to Help? A Multimodal Architecture for Recognizing When a User Needs Help from a Social Robot. In Proceedings of the International Conference on Social Robotics, 2022.

2020

- Supporting User Autonomy with Multimodal Fusion to Detect when a User Needs Assistance from a Social Robot. In Proceedings of the AI-HRI Symposium at AAAI-FSS 2020, 2020.

2019

- Using Human Eye Gaze Patterns as Indicators of Need for Assistance from a Socially Assistive Robot. In Proceedings of the Eleventh International Conference on Social Robotics, 2019.

- Developing Computational Models of Social Assistance to Guide Socially Assistive Robots. In Proceedings of the AAAI Fall Symposium on Artificial Intelligence and Human-Robot Interaction for Service Robots in Human Environments, 2019.

2017

- Hand Gestures and Verbal Acknowledgments Improve Human-Robot Rapport. In Proceedings of the Ninth International Conference on Social Robotics, 2017.