Four new papers

The CARES lab has 4 exciting papers to share!

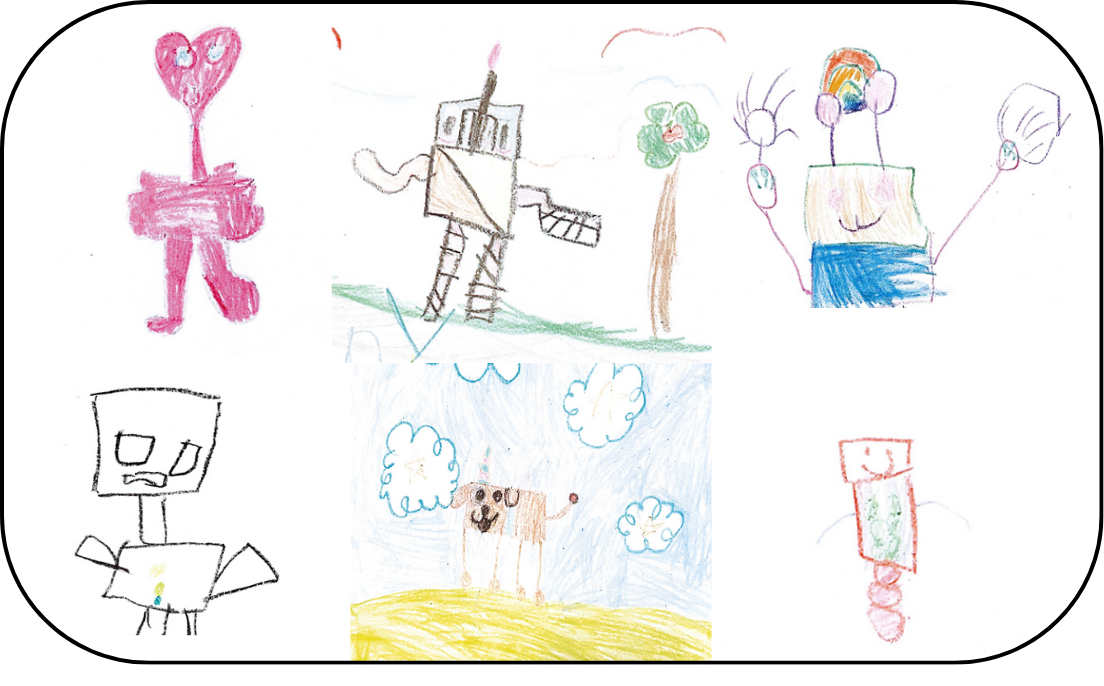

What does a Draw a Robot Task (DART) tell us about how children will interact with a robot?

In August, we presented a short paper exploring how age and childhood exposure to technology influence DART responses. We also examine how DART results influence subsequent interactions with and attention to a real robot. We find a surprising lack of significant correlations between the DART and other measures, except in the oldest age group of children (7-year-olds). As such, we recommend using this task with older children or supplementing it with other implicit tasks to fully understand early robot perceptions.

How do we tell a robot what to do?

In September, we presented our work in collaboration with Peerbots on a multi-perspective approach to designing social robot applications. Autonomous robots, teleoperated robots, and robots programmed by an end user each present a tradeoff between advantages and limitations, and there is an opportunity to integrate these approaches so that people benefit from the best-fit approach for their use case. In this work, we propose integrating these seemingly distinct robot control approaches to uncover a common data representation of the social actions that define a robot's social expression. We demonstrate the value of integrating an authoring system, teleoperation interface, and robot planning system by integrating instances of these systems for robot storytelling. By relying on an integrated system, domain experts can define behaviors through end-user interfaces that teleoperators and autonomous robot programmers can use directly, thus providing a cohesive expert-driven robot system.

How do we design an inclusive approach to developing machine ethics curriculum?

In work recently accepted to the Student Research Competition at SIGCSE TS 2025, F\&M student El Muchuwa describes our pioneering approach to an inclusive curriculum design process that broadens accessibility to machine ethics education. In collaboration with Lee Franklin at the F\&M Faculty Center, we are developing an approach that uses a “source” course to develop materials for seven “target” courses. The source course is a machine ethics curriculum development course in which students and faculty collaboratively build curricular materials for integration into non-computer science courses. Here we describe the development of the “source” course using a curriculum co-creation process that leverages student and faculty expertise. The process emphasizes an inclusive design approach, rooted in continuous stakeholder feedback and consistent, transparent communication. The products of this process include course materials that incorporate underrepresented ethical frameworks, peer-reviewed journal assignments that promote reflective learning and sharing of diverse perspectives, and a final module project in which students collaborate with faculty to co-create curricular materials. Our approach aims to broaden a culturally relevant understanding of ethical challenges in technology while ensuring that the curriculum resonates with diverse student backgrounds.

What would be a more comprehensive evaluation of machine theory of mind?

In work recently accepted for presentation at the ToM4AI workshop in March, we propose a new dataset that introduces new challenges by requiring explicit reasoning over beliefs and knowledge. In collaboration with Barnstorm Research and the Navy Research Lab, we argue that while the AI community has made incredible gains in modeling and simulating theory of mind (ToM), there is a need for ToM evaluations to go beyond predicting an agent’s intentions based on observations of their actions. Our new Tangram dataset represents observations of a child assembling a tangram puzzle with the assistance of a human or robot instructor, along with the interventions that the instructor has made to assist the child. Due in part to the intricacies of this task, the dataset supports evaluating ToM capabilities related to goal recognition, plan recognition, and false belief reasoning. Additionally, the dataset supports investigation into how ToM reasoning connects to the subsequent actions of the ToM reasoner. Altogether, the Tangram dataset has qualities that will help push forward AI for ToM.
